ComplexModel

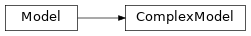

ComplexModel inheritance diagram

- class savant.base.model.ComplexModel(local_path=None, remote=None, model_file=None, batch_size=1, precision=ModelPrecision.FP16, input=ModelInput(object='auto.frame', layer_name=None, shape=None, maintain_aspect_ratio=False, scale_factor=1.0, offsets=(0.0, 0.0, 0.0), color_format=<ModelColorFormat.RGB: 0>, preprocess_object_meta=None, preprocess_object_image=None), output=ComplexModelOutput(layer_names=[], converter='???', attributes='???', objects='???'))

Complex model configuration template. Validates entries in a module config file under element.model.

A complex model combines object and attribute models, for example a face detector that produces bounding boxes and landmarks.

- batch_size: int = 1

Number of frames or objects to be inferred together in a batch.

Note

If the model is an NvInferModel and it is configured to use the TRT engine file directly, the default value for batch_size will be taken from the engine file name, by parsing it according to the scheme {model_name}_b{batch_size}_gpu{gpu_id}_{precision}.engine
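The naming scheme in the note above can be illustrated with a small parser. This is a minimal sketch, not Savant's own implementation: the regular expression, the EngineFileInfo tuple, the parse_engine_file_name helper and the example file name are all hypothetical and only mirror the scheme {model_name}_b{batch_size}_gpu{gpu_id}_{precision}.engine.

```python
import re
from typing import NamedTuple, Optional

# Hypothetical pattern mirroring the documented scheme:
# {model_name}_b{batch_size}_gpu{gpu_id}_{precision}.engine
ENGINE_NAME_RE = re.compile(
    r"^(?P<model_name>.+)"
    r"_b(?P<batch_size>\d+)"
    r"_gpu(?P<gpu_id>\d+)"
    r"_(?P<precision>[A-Za-z0-9]+)"
    r"\.engine$"
)


class EngineFileInfo(NamedTuple):
    """Values recovered from an engine file name (illustrative type)."""

    model_name: str
    batch_size: int
    gpu_id: int
    precision: str


def parse_engine_file_name(file_name: str) -> Optional[EngineFileInfo]:
    """Return the parsed parts, or None if the name does not follow the scheme."""
    match = ENGINE_NAME_RE.match(file_name)
    if match is None:
        return None
    return EngineFileInfo(
        model_name=match["model_name"],
        batch_size=int(match["batch_size"]),
        gpu_id=int(match["gpu_id"]),
        precision=match["precision"].lower(),
    )


# Example with an assumed file name.
info = parse_engine_file_name("yolov4.onnx_b16_gpu0_fp16.engine")
```

In this sketch a name that does not match the scheme yields None, so a caller could fall back to the configured batch_size and precision defaults.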

- input: ModelInput = ModelInput(object='auto.frame', layer_name=None, shape=None, maintain_aspect_ratio=False, scale_factor=1.0, offsets=(0.0, 0.0, 0.0), color_format=<ModelColorFormat.RGB: 0>, preprocess_object_meta=None, preprocess_object_image=None)

Optional configuration of input data and custom preprocessing methods for a model. If not set, the input defaults to the entire frame.

- local_path: Optional[str] = None

Path where all the necessary model files are placed. By default, the value of module parameter “model_path” and element name will be used (“model_path / element_name”).

- model_file: Optional[str] = None

The model file, e.g. yolov4.onnx.

Note

The model file is specified without a location. The absolute path to the model file will be defined as “local_path/model_file”.
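The path rules described for local_path and model_file can be sketched as follows. This is an illustration under stated assumptions rather than Savant's code: resolve_model_file is a hypothetical helper, the example directory and element names are made up, and PurePosixPath is used only so the result is the same on every platform.

```python
from pathlib import PurePosixPath
from typing import Optional


def resolve_model_file(
    model_path: str,
    element_name: str,
    model_file: str,
    local_path: Optional[str] = None,
) -> PurePosixPath:
    """Build the model file location from the documented rules.

    If local_path is not set, it defaults to model_path / element_name.
    The model file is then located at local_path / model_file.
    """
    if local_path is not None:
        base = PurePosixPath(local_path)
    else:
        base = PurePosixPath(model_path) / element_name
    return base / model_file


# Assumed values, for illustration only.
default_location = resolve_model_file("/models", "face_detector", "yolov4.onnx")
custom_location = resolve_model_file(
    "/models", "face_detector", "yolov4.onnx", local_path="/opt/custom"
)
```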

- precision: ModelPrecision = 2

Data format to be used by inference.

Example

    precision: fp16
    # precision: int8
    # precision: fp32

Note

If the model is an NvInferModel and it is configured to use the TRT engine file directly, the default value for precision will be taken from the engine file name, by parsing it according to the scheme {model_name}_b{batch_size}_gpu{gpu_id}_{precision}.engine

- remote: Optional[RemoteFile] = None

Configuration of the remote location of the model files. Supported schemes: s3, http, https, ftp.
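As a rough illustration of the supported schemes listed above, a URL can be checked before it is placed in the remote configuration. This is only a sketch: is_supported_remote_url and the example URLs are hypothetical, and the check says nothing about how Savant itself validates or downloads remote files.

```python
from urllib.parse import urlparse

# Schemes listed in the documentation for the remote location.
SUPPORTED_SCHEMES = frozenset({"s3", "http", "https", "ftp"})


def is_supported_remote_url(url: str) -> bool:
    """Return True if the URL scheme is one of the documented schemes."""
    return urlparse(url).scheme.lower() in SUPPORTED_SCHEMES


# Assumed URLs, for illustration only.
s3_ok = is_supported_remote_url("s3://example-bucket/models/yolov4.zip")
file_ok = is_supported_remote_url("file:///tmp/yolov4.zip")
```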

- output: ComplexModelOutput = ComplexModelOutput(layer_names=[], converter='???', attributes='???', objects='???')

Configuration for post-processing of a complex model’s results.